
My presentation of Methods of Concurrency at CocoaHeads Stockholm 2013-02. Don’t miss the rest of the talks from that evening!


My programmer world was turned upside down when I first saw the new ‘await’ keyword in C# about a year ago. Ever since, I’ve wanted to share the thoughts it sparked, but never got around to it.

In the past few evenings I’ve written some tools that I can’t not share with you, so here we go. You can skip the background chapters if you’ve already seen the video linked above. The code is available on GitHub.

tl;dr: Building an application using a GCD queue for every class, simple NSInvocation-based dispatch between threads, and Tasks (stolen from .NET) to get the results of asynchronous operations and chain them together.

This article starts with five chapters of background:

- what asynchrony is;
- how such code gets messy;
- my favorite solution of pretending there is no problem;
- how C# generalizes asynchronous operations as ‘tasks’ and gains enormous power from that generalization;
- the keywords ‘async’ and ‘await’, new in C# 5.

Then come five chapters on my own creations. I replicate C#’s Task in Objective-C as the basic building block for everything else, and delve into its implementation. Then I move all my classes onto their own GCD work queues and call them ‘agents’. I go through invocation grabbers again, because I use them together with SPTask to simplify cross-thread communication with sp_agentAsync. Finally, I delve into the implementation of sp_agentAsync.

In the summary, this code is dissected, and hopefully by then you will understand every part of it:

```objc
- (SPTask*)funkifyImageAndPublishResult:(NSURL*)url
{
    return [[[[[_backendService sp_agentAsync] requestURL:url] chain] then:^ id (NSData *response) {
        UIImage *image = [UIImage imageWithData:response];
        return [image funkyTransform];
    } on:dispatch_get_global_queue(0,0)] chain:^ SPTask*(UIImage *image) {
        return [_imageHostingService postImageAndReturnImageURL:image];
    } on:_imageHostingService.workQueue];
}
```

Just to make things clear, let’s sort asynchrony into three categories:

If at all possible, many programmers choose to tackle asynchrony by opting out of it entirely. In some cases, this way of doing networking is acceptable:

```
while(true) {
    var imageLength = toInt(read(socket, 2));
    var image = read(socket, imageLength);
    var mirroredImage = mirrorImage(image);
    write(socket, length(mirroredImage));
    write(socket, mirroredImage);
}
```

If that code is part of a UI, or needs to do anything other than the above, the whole paradigm becomes unacceptable. A network read might take minutes, and image processing isn’t cheap either.

Over the years, many patterns have emerged to deal with asynchrony. Some of them are, in rough order of evolution:

#2 is quite popular in Objective-C, as we have GCD (mikeash plug!) to help us out, and GCD is closure-based. However, if you’re not careful, your code will end up looking like this:

```objc
- (void)handleOneRequest
{
    // async I/O
    [socket readUInt32ToCompletion:^(uint32_t length) {
        [socket readToLength:length completion:^(NSData *data) {
            dispatch_async(dispatch_get_global_queue(0,0), ^{
                // async processing
                UIImage *image = [UIImage imageWithData:data];
                UIImage *mirrored = [image mirror];
                NSData *pngBytes = [mirrored pngData];
                [socket writeUInt32:pngBytes.length completion:^{
                    [socket writeData:pngBytes completion:^{
                        [self handleOneRequest];
                    }];
                }];
            });
        }];
    }];
}
```

Yuck. And it gets way worse when you add error handling. The same level of asynchrony written with callbacks or delegates gets almost as messy. So, instead…

Gevent is a popular Python library among Spotify backend developers, and one that fascinates me. Gevent implements cooperative multitasking on lightweight user-space threads (“green threads”). It suspends a green thread whenever that thread makes a blocking call. You can think of it as methods becoming coroutines that yield whenever they would block. Because gevent patches the system libraries, the following code looks synchronous but actually runs asynchronously.

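```python
from gevent import monkey
monkey.patch_all()  # make blocking stdlib calls (sockets etc.) yield to other green threads

import gevent
import urllib2

def print_head(url):
    print ('Starting %s' % url)
    data = urllib2.urlopen(url).read()
    print ('%s: %s bytes: %r' % (url, len(data), data[:50]))

urls = ['http://www.python.org/', 'http://www.gevent.org/']  # example URLs
jobs = [gevent.spawn(print_head, url) for url in urls]
gevent.joinall(jobs)  # wait until every green thread has finished
```

The above code will fetch the contents of all the URLs in ‘urls’ almost simultaneously. The implementation of ‘print_head’ pays no attention to asynchrony at all, and looks completely synchronous. The problem has become invisible. That this is possible using just a library is a testament to the flexibility of Python.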
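For contrast, Python’s standard library later took a more explicit route, much like the C# keywords discussed next. asyncio marks every suspension point with ‘await’, so nothing needs to be monkey-patched.

Below is a minimal sketch of the same fan-out. ‘fake_fetch’ is a hypothetical stand-in that sleeps instead of touching the network, so the example runs offline. It needs Python 3.7+ for asyncio.run.

```python
import asyncio


async def fake_fetch(url, delay):
    """Hypothetical stand-in for network I/O: waits instead of touching the network."""
    await asyncio.sleep(delay)  # suspends this coroutine; the event loop runs the others
    return ("contents of " + url).encode()


async def print_head(url):
    print("Starting %s" % url)
    data = await fake_fetch(url, 0.1)  # explicit suspension point, unlike gevent
    print("%s: %s bytes: %r" % (url, len(data), data[:50]))
    return len(data)


async def main(urls):
    # Start every fetch at once and wait for all of them, like spawning green threads.
    return await asyncio.gather(*(print_head(url) for url in urls))


sizes = asyncio.run(main(["a", "bb", "ccc"]))
print(sizes)  # [13, 14, 15]
```

asyncio.gather returns the results in the order the coroutines were passed in, regardless of which one finishes first.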

C# 5 has new features to try to reach the same level of invisibility. They have been designed to be a little more explicit, and are added as the language features 'async’ and 'await’ rather than as a library. But before we can talk about them, we need to introduce the older concept of Tasks.

First, we realize that we can probably generalize the concept of “do something and notify me when it’s done”. One such generalization is the future/promise (obligatory mikeash plug), but it seems that there are many situations in which they will block, which is unacceptable for UI code.

In .NET, every asynchronous action is instead wrapped in a System.Threading.Tasks.Task. At its core, Task is almost a value class, just holding callbacks to be called when an operation is done. It seems so trivial that it’s hardly worth an abstraction. But once you have that abstraction, you can throw so many things at it. To enumerate a few in .NET:

Back to fancy language features. In C#, the ’async’ keyword will transform a method into a special coroutine. The ’await’ keyword is basically a coroutine ’yield’. Let’s take this (slightly fictitious) synchronous code:

```csharp
private byte[] GetURLContents(string url) {
    var content = new MemoryStream();
    var request = (HttpWebRequest)WebRequest.Create(url);
    WebResponse response = request.GetResponse(); // <-- network call, blocks!
    Stream responseStream = response.GetResponseStream();
    responseStream.CopyTo(content); // <-- network call, blocks!
    return content.ToArray();
}
```

… and turn it asynchronous:

```csharp
private async Task<byte[]> GetURLContents(string url) {
    var content = new MemoryStream();
    var request = (HttpWebRequest)WebRequest.Create(url);
    WebResponse response = await request.GetResponseAsync(); // <-- yields and returns from this method!
    Stream responseStream = response.GetResponseStream();
    await responseStream.CopyToAsync(content); // <-- yields and returns from this method!
    return content.ToArray();
}
```

Note the slight changes:

- the ‘async’ modifier;
- the Task<byte[]> return type;
- the two ‘await’ expressions on the Async variants of the blocking calls.

Making a method ‘async’ means that instead of returning the value, it returns a task representing the future delivery of that value. Since the caller now has a task, we have all eternity to perform the requested action, and will never block the caller.

We can pretend that the method has been cut into three pieces: the part before the first await keyword, the part between the first and second await keyword, and the part after the second await keyword. We have a chain of three Tasks. Since I don’t really know C#, the language I will use below does not exist:

```
private Task<byte[]> GetURLContentsAsync(string url)
{
    var content = new MemoryStream();
    HttpWebRequest request = WebRequest.Create(url);
    Task<WebResponse> responseTask = request.GetResponseAsync();
    Task task2 = responseTask.startAndCallback(^(WebResponse response) {
        Stream responseStream = response.GetResponseStream();
        Task copyTask = responseStream.CopyToAsync(content);
        Task<byte[]> bytesTask = copyTask.startAndCallback(^ {
            return content.ToArray();
        });
        return bytesTask;
    });
    return task2;
}
```

This version makes the three tasks explicit rather than implicit. They are chained together so that each one starts when the previous one completes, and the method returns a task representing the whole chain.
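The ‘cut into pieces’ idea can be sketched in Python, with a generator playing the coroutine. Each ‘yield task’ stands in for ‘await’, and a small driver resumes the generator when the yielded task completes.

This illustrates the idea only. It is not how the C# compiler actually rewrites async methods (it generates a state machine). ‘Task’, ‘run_coroutine’ and ‘fake_async’ are hypothetical names, and the network is simulated by completing tasks by hand.

```python
class Task:
    """Minimal task: a value that may not exist yet, plus callbacks waiting for it."""

    def __init__(self):
        self._callbacks = []
        self._done = False
        self._value = None

    def add_callback(self, callback):
        if self._done:
            callback(self._value)
        else:
            self._callbacks.append(callback)

    def complete(self, value):
        self._done, self._value = True, value
        waiting, self._callbacks = self._callbacks, []
        for callback in waiting:
            callback(value)


def run_coroutine(generator_function, *args):
    """Drive a generator that yields Tasks; `yield task` plays the role of `await task`.
    Returns a Task for the generator's return value."""
    result = Task()
    generator = generator_function(*args)

    def step(sent_value):
        try:
            awaited = generator.send(sent_value)  # run the next piece, up to the next "await"
        except StopIteration as finished:
            result.complete(finished.value)  # the generator returned: finish the outer task
            return
        awaited.add_callback(step)  # resume where we left off once that task completes

    step(None)
    return result


pending = []


def fake_async(value):
    """Hypothetical async operation: an unfinished Task the demo completes by hand."""
    task = Task()
    pending.append((task, value))
    return task


def get_url_contents(url):
    response = yield fake_async("response for " + url)  # piece 1 ends here
    data = yield fake_async(response.upper())  # piece 2 ends here
    return data  # piece 3


outer = run_coroutine(get_url_contents, "x")
seen = []
outer.add_callback(seen.append)
while pending:  # play the role of the network, completing operations in order
    task, value = pending.pop(0)
    task.complete(value)
print(seen)  # ['RESPONSE FOR X']
```

Each piece of get_url_contents runs only once the task it is waiting on has completed, which gives the same chain of three tasks as the explicit version.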

It seems that Apple felt they were done once GCD was out the door. Indeed, GCD is an amazing library, but it only helps us with where to execute our code. It does not help with higher-level questions like “how do I compose these operations?” or even “how do I know when the work is done?”, nor does it try to. Microsoft has upped the game, and I hope Apple follows (and surpasses, of course).

Until then, we make do with the tools we have. Before C# 5, .NET programmers had no language support for asynchrony either, and composed tasks using the Task class’s ContinueWith method. This is a perfect recipe for something to replicate in our language of choice, and thus I built SPTask in the same vein. Let’s try out its header:

```objc
#import <Foundation/Foundation.h>

@class SPTask;

typedef void(^SPTaskCallback)(id value);
typedef void(^SPTaskErrback)(NSError *error);
typedef id(^SPTaskThenCallback)(id value);
typedef SPTask*(^SPTaskChainCallback)(id value);

/** @class SPTask
    @abstract Any asynchronous operation that someone might want to know the result of.
 */
@interface SPTask : NSObject

/** @method addCallback:on:
    Add a callback to be called async when this task finishes, including the queue to
    call it on. If the task has already finished, the callback will be called immediately
    (but still asynchronously)
    @return self, in case you want to add more call/errbacks on the same task */
- (instancetype)addCallback:(SPTaskCallback)callback on:(dispatch_queue_t)queue;

/** @method addErrback:on:
    Like callback, but for when the task fails
    @return self, in case you want to add more call/errbacks on the same task */
- (instancetype)addErrback:(SPTaskErrback)errback on:(dispatch_queue_t)queue;

/** @method then:on:
    Add a callback, and return a task that represents the return value of that
    callback. Useful for doing background work with the result of some other task.
    This task will fail if the parent task fails, chaining them together.
    @return A new task to be executed when 'self' completes, representing
            the work in 'worker'
 */
- (instancetype)then:(SPTaskThenCallback)worker on:(dispatch_queue_t)queue;

/** @method chain:on:
    Add a callback that will be used to provide further work to be done. The
    returned SPTask represents this work-to-be-provided.
    @return A new task to be executed when 'self' completes, representing
            the work provided by 'chainer'
 */
- (instancetype)chain:(SPTaskChainCallback)chainer on:(dispatch_queue_t)queue;

...
@end

/** @class SPTaskCompletionSource
    Task factory for a single task that the caller knows how to complete/fail.
 */
@interface SPTaskCompletionSource : NSObject

/** The task that this source can mark as completed. */
- (SPTask*)task;

/** Signal successful completion of the task to all callbacks */
- (void)completeWithValue:(id)value;

/** Signal failed completion of the task to all errbacks */
- (void)failWithError:(NSError*)error;

@end
```

If the only method we had on SPTask was addCallback:, we would still be in the ‘yuck’ situation from above. ‘then:’ and ‘chain:’ to the rescue! They let us write code that looks just like the await code in C#, except that it’s indented one level:

```objc
- (SPTask*)getURLContents:(NSURL*)URL
{
    NSURLRequest *request = [NSURLRequest requestWithURL:URL];
    return [[request sp_getResponse] chain:^ SPTask *(NSURLResponse *response) {
        return [response sp_readDataUntilEOF];
    }];
}
```

The signature of the hypothetical sp_getResponse would be -(SPTask/*<NSURLResponse>*/)sp_getResponse; (yes, I’m still pretending that Objective-C has generics). It’s a method that immediately returns an SPTask which, when it completes, will yield an NSURLResponse to its callbacks. We use this task to chain it together with a new operation.

When we get the response (which represents just the headers, not the whole body), we know that we will immediately want to start reading the entire HTTP payload into an NSData object. The signature of the hypothetical sp_readDataUntilEOF would be -(SPTask/*<NSData>*/)sp_readDataUntilEOF;.

Returning the ‘readData…’ task from the ‘chain:’ block creates a new SPTask that represents the whole chain of getResponse > response done > request data > data has arrived. The caller of getURLContents: can now add a callback to the SPTask she receives, and get the full data contents of the URL.

When comparing this code to the C# example, each ’return’ is equivalent to a C# ’await’, because we have chopped the method up into smaller blocks, manually creating a coroutine (a method that can be resumed from where it last left off).

Let’s look at a more involved example.

```objc
- (SPTask*)handleOneRequest
{
    __block NSData *pngBytes;
    return [[[[socket readUInt32] chain:^(NSNumber *length) {
        return [socket readToLength:[length intValue]];
    }] chain:^(NSData *imageData) {
        UIImage *image = [UIImage imageWithData:imageData];
        UIImage *mirrored = [image mirror];
        pngBytes = [mirrored pngData];
        return [socket writeUInt32:pngBytes.length];
    } on:dispatch_get_global_queue(0,0)] chain:^(id _) {
        // Chain (rather than just add a callback) so that the returned task
        // only completes once the image data itself has been written.
        return [socket writeData:pngBytes];
    }];
}
```

This example has exactly the same level of asynchrony as ‘yuck’, but because we can chain instead of nest, the method is much more readable. Let’s look at the calling side.

```objc
[[[self handleOneRequest] addCallback:^(id _) {
    [self doMoreStuff];
}] addErrback:^(NSError *err) {
    NSLog(@"Error handling the request! %@", err);
}];
```

Objective-C code doesn’t use exceptions for error handling the way C# does, so I stole ‘errbacks’ from the Python framework Twisted. They work like normal callbacks, except that they are called when an error happens. Chaining tasks together also chains their errors, so in the case of handleOneRequest, errors will be propagated all the way up to the caller.
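To see how then, chain and errbacks compose, here is a single-threaded Python sketch of the same ideas. It is not SPAsync. ‘Queue’ is a hypothetical stand-in for a GCD queue that we drain by hand so the order is deterministic, and the snake_case names only mirror the Objective-C selectors.

```python
from collections import deque


class Queue:
    """Stand-in for a dispatch queue: work is enqueued now and run later by drain()."""
    def __init__(self):
        self._work = deque()
    def dispatch_async(self, fn):
        self._work.append(fn)
    def drain(self):
        while self._work:
            self._work.popleft()()


class Task:
    def __init__(self):
        self._callbacks, self._errbacks = [], []
        self._done, self._value, self._error = False, None, None

    def add_callback(self, callback, queue):
        if not self._done:
            self._callbacks.append((callback, queue))
        elif self._error is None:  # already succeeded: still call back asynchronously
            queue.dispatch_async(lambda: callback(self._value))
        return self

    def add_errback(self, errback, queue):
        if not self._done:
            self._errbacks.append((errback, queue))
        elif self._error is not None:  # already failed
            queue.dispatch_async(lambda: errback(self._error))
        return self

    def _finish(self, value, error):
        self._done, self._value, self._error = True, value, error
        waiting = self._errbacks if error is not None else self._callbacks
        argument = error if error is not None else value
        for fn, queue in waiting:
            queue.dispatch_async(lambda fn=fn: fn(argument))
        self._callbacks, self._errbacks = [], []

    def then(self, worker, queue):
        """Task for worker(value), run on `queue`; fails if self fails."""
        source = CompletionSource()
        self.add_callback(lambda value: source.complete(worker(value)), queue)
        self.add_errback(source.fail, queue)
        return source.task

    def chain(self, chainer, queue):
        """Task that waits for the task returned by chainer(value); fails if either fails."""
        source = CompletionSource()
        def on_value(value):
            provided = chainer(value)
            provided.add_callback(source.complete, queue)
            provided.add_errback(source.fail, queue)
        self.add_callback(on_value, queue)
        self.add_errback(source.fail, queue)
        return source.task


class CompletionSource:
    """Only whoever holds the source can complete or fail its task."""
    def __init__(self):
        self.task = Task()
    def complete(self, value):
        self.task._finish(value, None)
    def fail(self, error):
        self.task._finish(None, error)


def completed(value):
    """Hypothetical helper: an already-finished task."""
    source = CompletionSource()
    source.complete(value)
    return source.task


# download -> length (then) -> doubled length from another "service" (chain)
q = Queue()
download = CompletionSource()
doubled = download.task.then(len, q).chain(lambda n: completed(n * 2), q)
results = []
doubled.add_callback(results.append, q)
download.complete(b"hello")
q.drain()
print(results)  # [10]

# An error at the start propagates through every link to the last task.
failing = CompletionSource()
errors = []
failing.task.then(len, q).chain(lambda n: completed(n), q).add_errback(errors.append, q)
failing.fail(ValueError("boom"))
q.drain()
print(errors[0])  # boom
```

Every link registers an errback that fails its own completion source. A failure anywhere therefore surfaces on the last task, so the caller needs only one errback.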

SPTask is such a simple class that we might as well take a closer look at it.

```objc
@interface SPTask ()
{
    NSMutableArray *_callbacks;
    NSMutableArray *_errbacks;
    BOOL _isCompleted;
    id _completedValue;
    NSError *_completedError;
}
@end

@implementation SPTask

- (id)init
{
    if(!(self = [super init]))
        return nil;
    _callbacks = [NSMutableArray new];
    _errbacks = [NSMutableArray new];
    return self;
}
```

We track all callbacks, errbacks, and whether this task has finished.

```objc
- (instancetype)addCallback:(SPTaskCallback)callback on:(dispatch_queue_t)queue
{
    @synchronized(_callbacks) {
        if(_isCompleted) {
            if(!_completedError) {
                dispatch_async(queue, ^{
                    callback(_completedValue);
                });
            }
        } else {
            [_callbacks addObject:[[SPCallbackHolder alloc] initWithCallback:callback onQueue:queue]];
        }
    }
    return self;
}

- (instancetype)addErrback:(SPTaskErrback)errback on:(dispatch_queue_t)queue
{
    // Same lock as addCallback:on: and the completion methods, so that adding and
    // completing can never touch _callbacks/_errbacks at the same time.
    @synchronized(_callbacks) {
        if(_isCompleted) {
            if(_completedError) {
                dispatch_async(queue, ^{
                    errback(_completedError);
                });
            }
        } else {
            [_errbacks addObject:[[SPCallbackHolder alloc] initWithCallback:errback onQueue:queue]];
        }
    }
    return self;
}
```

If the task has already finished, we call the callback immediately (though still asynchronously) with the value we have stored, as long as the task succeeded. Likewise, an errback only fires if the task failed. Otherwise, we store the callback away for later. Every method that touches the callback arrays takes the same lock.

We also return self, so that more calls can be chained onto it, such as an errback. It also lets the caller hand the task to another piece of code that wants to know about its completion.

```objc
- (instancetype)then:(SPTaskThenCallback)worker on:(dispatch_queue_t)queue
{
    SPTaskCompletionSource *source = [SPTaskCompletionSource new];
    SPTask *then = [source task];
```

‘then:on:’ is the method you would call instead of ‘addCallback:on:’ when you want to add some background work to a chain of tasks. We start off by creating the new task, representing this background work. To show off how to use a completion source (the public API), I create the task through a source rather than directly, although the source just forwards to the private task API.

```objc
    [self addCallback:^(id value) {
```

We can’t do that work until we have the result of the current task, so we wait for it with addCallback:on:.

```objc
        id result = worker(value);
        [source completeWithValue:result];
    } on:queue];
```

Then we do the work. We just do it synchronously inline, since we have already been asynchronously dispatched to the requested queue. When we have the result, we complete our new ‘then’ task with that value, and all of its callbacks will be called.

```objc
    [self addErrback:^(NSError *error) {
        [source failWithError:error];
    } on:queue];
```

We also add an errback on self, so that our ‘then’ task will fail if the original task fails.

```objc
    return then;
}
```

We’re done! We return the task to the caller, who can now wait for the background work. On to the next method.

```objc
- (instancetype)chain:(SPTaskChainCallback)chainer on:(dispatch_queue_t)queue
{
    SPTaskCompletionSource *chainSource = [SPTaskCompletionSource new];
    SPTask *chain = [chainSource task];
```

‘chain:on:’ is the method you would call instead of ‘then:on:’ when you want someone else to do work with the data from the callback, rather than doing the work yourself. The chain callback returns a task, which we will wait for using the ‘chain’ task we create here.

```objc
    [self addCallback:^(id value) {
        SPTask *workToBeProvided = chainer(value);
```

When ‘self’ is done generating its value, we ask the callback for the task we should wait for.

```objc
        [workToBeProvided addCallback:^(id providedValue) {
            [chainSource completeWithValue:providedValue];
```

We add a callback to this new task, and use the ‘chain’ task we created at the beginning of the method to signal its completion.

```objc
        } on:queue];
        [workToBeProvided addErrback:^(NSError *error) {
            [chainSource failWithError:error];
        } on:queue];
```

If the work-to-be-provided fails, we fail the whole chain.

```objc
    } on:queue];
    [self addErrback:^(NSError *error) {
        [chainSource failWithError:error];
    } on:queue];
```

If self fails, we fail the whole chain too.

```objc
    return chain;
}
```

We now have a task to return to the caller that represents the completion of both ‘self’ and the task provided by the callback.

```objc
- (instancetype)chain
{
    return [self chain:^SPTask *(id value) {
        return value;
    } on:dispatch_get_global_queue(0, 0)];
}
```

‘chain’ is just a convenience method for when the value this task yields is itself a task. We will talk about it in depth later on.

```objc
- (void)completeWithValue:(id)value
{
    @synchronized(_callbacks) {
        _isCompleted = YES;
        _completedValue = value;
        for(SPCallbackHolder *holder in _callbacks) {
            dispatch_async(holder.callbackQueue, ^{
                holder.callback(value);
            });
        }
        [_callbacks removeAllObjects];
        [_errbacks removeAllObjects];
    }
}
```

completeWithValue: is exposed by SPTaskCompletionSource, not SPTask itself, but this is the implementation that ends up being called. It dispatches each registered callback to its requested queue with the value that we completed the task with, and then drops the errbacks, which will never be needed. It also saves the value into _completedValue, in case someone adds a callback after the task has finished (see addCallback:on: for how this is handled).

```objc
- (void)failWithError:(NSError*)error
{
    @synchronized(_callbacks) { // the same lock as everywhere else
        _isCompleted = YES;
        _completedError = error;
        for(SPCallbackHolder *holder in _errbacks) {
            dispatch_async(holder.callbackQueue, ^{
                holder.callback(error);
            });
        }
        [_callbacks removeAllObjects];
        [_errbacks removeAllObjects];
    }
}

@end
```

The same goes for errbacks, except that we save the error rather than the value.
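Callbacks may be registered on one thread while another thread completes the task. The ‘already finished?’ check when adding a callback and the flag-and-drain step in completion must therefore be serialized by one and the same lock. With two different locks, both could touch the callback array at once.

Here is a Python sketch of that invariant, with a thread pool standing in for GCD queues. ThreadSafeTask is a made-up name. Two choices belong to this sketch alone, not to SPTask: it dispatches outside the lock, and it refuses to complete twice.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class ThreadSafeTask:
    """Task whose callback list and completion flag are guarded by ONE lock,
    so each callback runs exactly once however add_callback races with complete."""

    def __init__(self):
        self._lock = threading.Lock()
        self._callbacks = []  # (callback, executor) pairs waiting for the value
        self._done = False
        self._value = None

    def add_callback(self, callback, executor):
        with self._lock:
            if not self._done:
                self._callbacks.append((callback, executor))
                return self
            value = self._value
        executor.submit(callback, value)  # already finished: still call back asynchronously
        return self

    def complete(self, value):
        with self._lock:
            if self._done:
                raise RuntimeError("task already completed")
            self._done, self._value = True, value
            waiting, self._callbacks = self._callbacks, []
        for callback, executor in waiting:  # dispatch outside the lock
            executor.submit(callback, value)


pool = ThreadPoolExecutor(max_workers=4)
task = ThreadSafeTask()
calls = []
calls_lock = threading.Lock()


def record(value):
    with calls_lock:
        calls.append(value)


def add_many():
    for _ in range(100):
        task.add_callback(record, pool)


adders = [threading.Thread(target=add_many) for _ in range(4)]
for thread in adders:
    thread.start()
task.complete(42)  # races with the adders
for thread in adders:
    thread.join()
pool.shutdown(wait=True)
print(len(calls))  # 400
```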

In the previous chapter, I ignored the ’on:’ argument on the Task methods, and didn’t really focus on it in this chapter. Let’s take a detour from task abstractions and talk about Grand Central Dispatch, before getting back to it.

My day job is writing code for some streaming music company. My main annoyance with that product is that it stops and thinks too much (although not nearly as often as iTunes, phew!), doing too much work on the main thread. So in my latest pet project, my counter-reaction is to make sure that no part of the system ever blocks another part synchronously. Thus, SPAgent was born:

```objc
/**
    Experimental multithreading primitive: An object conforming to SPAgent is not thread safe,
    but it runs in its own thread. To perform any of the methods on it, you must first dispatch to its
    workQueue.
 */
@protocol SPAgent <NSObject>
@property(nonatomic,readonly) dispatch_queue_t workQueue;
@end
```

Any object conforming to the SPAgent protocol thus communicates that you MUST NOT call any method on it from any queue other than its workQueue. That is a private GCD dispatch queue that the object owns and on which it does all its work.

We thereby externalize synchronization to the caller, letting the caller use whatever method is most appropriate at that call point. Externalizing what seems like an implementation detail feels like a bad idea, but so far I’m very pleased with the pattern. It’s very Erlang-y: in Erlang, you do all major communication between “objects” as messages between processes, even as part of the language syntax.

The implementation of an agent is also very clean. You don’t have to do any locking anywhere, and you are guaranteed that only one method is being called at a time, so it’s very easy to reason about the internal state of your object.
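Here is a rough Python analogue of an agent. It assumes a single-worker ThreadPoolExecutor is an acceptable stand-in for a private serial GCD queue, and CounterAgent is a made-up example class. Four threads hammer the agent with increments, yet the agent itself never takes a lock.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class CounterAgent:
    """Agent-style object: no locks inside, because every method runs on its own work queue."""

    def __init__(self):
        # One worker thread = a serial queue: submitted jobs run one at a time, in FIFO order.
        self.work_queue = ThreadPoolExecutor(max_workers=1)
        self._count = 0  # only ever touched from work_queue

    def increment(self, by):
        self._count += by  # read-modify-write without a lock; calls never overlap
        return self._count

    def count(self):
        return self._count


agent = CounterAgent()


def caller():
    # Callers on any thread dispatch onto the agent's queue instead of calling it directly.
    for _ in range(250):
        agent.work_queue.submit(agent.increment, 1)


threads = [threading.Thread(target=caller) for _ in range(4)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()
# Submitted after every increment, so FIFO order guarantees it sees all 1000 of them.
total = agent.work_queue.submit(agent.count).result()
agent.work_queue.shutdown()
print(total)  # 1000
```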

Let’s explore an example.

```objc
MasterControlProgram *mcp = [MasterControlProgram new];
dispatch_async([mcp workQueue], ^{
    int leetAnswer = [mcp multiplicatify:42 with:1337];
    dispatch_async([self workQueue], ^{
        NSString *prettyThingForUI = [self ingestAnswer:leetAnswer];
        dispatch_async(dispatch_get_main_queue(), ^{
            [ui show:prettyThingForUI];
        });
    });
});
```

Well! This looks familiar. Dispatch queue spaghetti. What can we do about that? I decided to use invocation grabbers.

To refresh, NSInvocation is a Foundation class that encapsulates calling a method on an object. It can hold onto a target, a selector, a method signature (description of each argument and return value of a method), every argument of a call, and the return value. They are the building block that enables call forwarding and proxies to work. You can either create them manually, or have one created for you by implementing -forwardInvocation:, where one will be handed to you as an argument. In the latter case, you can use it immediately, but you can also store it and invoke it later.

Creating invocations manually is a hassle. Therefore, it is common to create an “invocation grabber”, that is, an object that saves the first unknown message sent to it and then lets the caller use the resulting invocation in some manner. There are many open source grabbers, but this is the one I have:

```objc
@interface SPInvocationGrabber : NSObject
-(id)initWithObject:(id)obj stacktraceSaving:(BOOL)saveStack;
@property (readonly, retain, nonatomic) id object;
@property (readonly, retain, nonatomic) NSInvocation *invocation;
...
-(void)invoke; // will release object and invocation
@end
```

You would use it like so:

```objc
id someObject;
SPInvocationGrabber *grabber = [[SPInvocationGrabber alloc] initWithObject:someObject stacktraceSaving:NO];
[grabber doThatThingWith:anArgument];
NSInvocation *invocation = [grabber invocation];
```

You now have an invocation that you can invoke now or later, with a reference to ‘someObject’, the method ‘doThatThingWith:’, and anArgument. You can optionally ask the invocation to retain all its arguments with -retainArguments. This isn’t done by default, to save some overhead in case you use the invocation immediately.
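Python has no NSInvocation, but __getattr__ gives a similar hook for ‘unknown’ messages. Below is a minimal grabber sketch that records the first call and replays it later. InvocationGrabber and Greeter are hypothetical names, not the SPInvocationGrabber API.

```python
class InvocationGrabber:
    """Records the first unknown method call made on it so it can be replayed on the target later."""

    def __init__(self, target):
        self._target = target
        self.invocation = None  # (method name, args, kwargs) once a call has been grabbed

    def __getattr__(self, name):
        # Python only calls __getattr__ for attributes not found normally,
        # which makes it roughly the counterpart of -forwardInvocation: for unknown messages.
        def record(*args, **kwargs):
            if self.invocation is None:
                self.invocation = (name, args, kwargs)
        return record

    def invoke(self):
        name, args, kwargs = self.invocation
        return getattr(self._target, name)(*args, **kwargs)


class Greeter:
    def greet(self, name, punctuation="!"):
        return "hello " + name + punctuation


grabber = InvocationGrabber(Greeter())
grabber.greet("world", punctuation="?")  # nothing runs yet; the call is only recorded
print(grabber.invocation)  # ('greet', ('world',), {'punctuation': '?'})
print(grabber.invoke())  # hello world?
```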

With some NSObject categories, you can have fun with higher-order messaging without closures:

```objc
@interface NSObject (SPInvocationGrabbing)
-(id)invokeAfter:(NSTimeInterval)delta;
-(id)inBackground;
-(id)onMain;
@end

// Example: blink
[[self invokeAfter:0.2] setSelected:YES animated:YES];
[[self invokeAfter:0.4] setSelected:NO animated:YES];

// Example: Background computation with UI update later
- ... {
    [[self inBackground] somethingExpensive];
}

- (void)somethingExpensive {
    ...
    [[button onMain] setTitle:computedTitle forState:UIControlStateNormal];
}
```

We can extend this concept to add a category on NSObject to dispatch to its workQueue, given that it’s an SPAgent:

```objc
@interface NSObject (SPAgentDo)
- (instancetype)sp_agentAsync;
@end

// Example
- ... {
    [[_backendService sp_agentAsync] requestURL:someURL whenDone:^(id _){ ... } callbackQueue:_workQueue];
}
```

This simplifies communication to the agent, but not back: taking the return value of requestURL:whenDone:callbackQueue: wouldn’t make sense, as it’s not actually being called on that line, but being scheduled to be called later. If only we had a concept representing future values…!

sp_agentAsync returns an invocation grabber, but what does the call on the invocation grabber return? An SPTask representing the real return value. Example:

```objc
- foo {
    SPTask *dataTask = [[_backendService sp_agentAsync] requestURL:someURL];
    [dataTask addCallback:^ (NSData *data) {
        // Do something with the newly acquired data
    } on:_workQueue];
}
```

This also highlights why every SPTask method takes a queue in addition to the callback to be run. We certainly don’t want to run the callback on the work queue of _backendService. Since we’re communicating between agents, we don’t want to call it on the main queue either. dispatch_get_current_queue() looks inviting, but the documentation states that it should only be used when you are the creator of the queue the call is executed from. Thus, we always need to be explicit about the destination queue.
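The same shape can be sketched in Python. A hypothetical AgentAsyncProxy forwards any method call to the agent’s work queue and hands back a concurrent.futures.Future immediately, much as sp_agentAsync hands back an SPTask. BackendService here is a made-up agent whose work queue is a single-worker executor; thread_name_prefix needs Python 3.6+.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class AgentAsyncProxy:
    """Every method call is forwarded to the agent's work queue; the caller
    immediately gets a concurrent.futures.Future standing in for the return value."""

    def __init__(self, agent):
        self._agent = agent

    def __getattr__(self, name):
        method = getattr(self._agent, name)  # resolve now, run later on the agent's queue

        def schedule(*args, **kwargs):
            return self._agent.work_queue.submit(method, *args, **kwargs)

        return schedule


class BackendService:
    """Made-up agent: owns a serial work queue and must only be used from it."""

    def __init__(self):
        self.work_queue = ThreadPoolExecutor(max_workers=1, thread_name_prefix="backend")

    def request_url(self, url):
        return "data for " + url, threading.current_thread().name


backend = BackendService()
future = AgentAsyncProxy(backend).request_url("http://example.com/")
data, ran_on = future.result(timeout=5)
backend.work_queue.shutdown()
print(data)  # data for http://example.com/
```

One difference is telling. In CPython, Future.add_done_callback runs the callback immediately if the future is already done, and otherwise on whichever thread completes it, here the backend’s worker thread. There is no way to name a destination queue, which is exactly the gap the on: argument fills in SPTask.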

The implementation of sp_agentAsync is interesting enough to take a closer look at.

```objc
@implementation NSObject (SPAgentDo)

- (instancetype)sp_agentAsync
{
    SPInvocationGrabber *grabber = [self grabWithoutStacktrace];
    __weak SPInvocationGrabber *weakGrabber = grabber;
```

Invocation grabbers are generally used rarely, so I gave them a stack-trace-saving feature to help find where bugs originate. Agents communicate often, so here we skip it to keep the overhead down.

```objc
    SPTaskCompletionSource *completionSource = [SPTaskCompletionSource new];
    SPTask *task = completionSource.task;
    __block void *unsafeTask = (__bridge void *)(task);
```

When you return a task from a method, the caller of that method shouldn’t be able to decide its completion state: that’s the job of the task’s creator. To hide those methods from the task API, a completion source is used instead.

```objc
    grabber.afterForwardInvocation = ^{
        NSInvocation *invocation = [weakGrabber invocation];
```

We have just created the grabber, but not yet returned it from this method, so we don’t know which method it will grab. We therefore need a hook that runs once the invocation has been grabbed, and that’s what this callback provides.

```objc
        // Let the caller get the result of the invocation as a task.
        // Block guarantees lifetime of 'task', so just bridge it here.
        BOOL hasObjectReturn = strcmp([invocation.methodSignature methodReturnType], @encode(id)) == 0;
        if(hasObjectReturn)
            [invocation setReturnValue:&unsafeTask];
```

We only want to return a task representing the return value if the method we’re wrapping returns a value of object type. If it does, we artificially set the SPTask as the return value of the invocation, which is what hands the task to the caller.

```objc
        dispatch_async([(id)self workQueue], ^{
#pragma clang diagnostic push
#pragma clang diagnostic ignored "-Warc-retain-cycles"
            // "invoke" will break the cycle, and the block must hold on to grabber
            // until this moment so that it survives (nothing else is holding the grabber)
            [grabber invoke];
#pragma clang diagnostic pop
```

Dispatch to the work queue of the target, and invoke the grabber’s invocation on that queue. In this odd case we actually want a retain cycle: the grabber should live until the dispatch to the new queue is done. This is similar to how NSTimer retains its target. Invoking the grabber sets its ‘afterForwardInvocation’ to nil, thus breaking the cycle and cleaning everything up.

```objc
            if(hasObjectReturn) {
                __unsafe_unretained id result = nil;
                [invocation getReturnValue:&result];
                [completionSource completeWithValue:result];
            }
```

The invocation has now been populated with the actual return value. We extract it and complete our SPTask with that value. If the caller added a callback to the SPTask, the value is then delivered to the queue that callback was registered on.

```objc
        });
    };
    return grabber;
}

@end
```

And we’re done! The grabber is returned to the caller, ready to begin the whole dispatch chain.

We can now go back to the example in the tl;dr and step through it, hopefully with full understanding of what’s going on.

```objc
@interface BackendService : NSObject <SPAgent>
- (SPTask*/*<NSData>*/)requestURL:(NSURL*)url;
@end

- (SPTask*)funkifyImageAndPublishResult:(NSURL*)url
{
    return [[[[[_backendService sp_agentAsync] requestURL:url] chain] then:^ id (NSData *response) {
```

We have a _backendService, which is an SPAgent. Therefore, we know we can’t just call requestURL: directly on it: we need to be on its work queue first. We use sp_agentAsync to get there.

Note that by doing so, the return value is actually SPTask<SPTask<NSData>>; that is, a task which yields a task which yields an NSData. To get to the NSData, we need to wait for the first task to complete, and then for the task it yields. This is a common situation, and thus we can use the convenience method ‘chain’.

Remember that ‘chain:on:’ adds a callback that provides more work to wait for. ‘chain’ is shorthand for ‘…] chain:^ (SPTask *task) { return task; } on:dispatch_get_global_queue(0, 0)]’. We’re saying, “the task yields a task. Just give me the latter when it’s ready”.

```objc
        UIImage *image = [UIImage imageWithData:response];
        return [image funkyTransform];
    } on:dispatch_get_global_queue(0,0)] chain:^ SPTask*(UIImage *image) {
```

The data has arrived. We’re on a global background queue, so we can do tons of expensive work here without affecting anyone. Once we’re done with our work, we chain it together with a new task that…

```objc
        return [_imageHostingService postImageAndReturnImageURL:image];
    } on:_imageHostingService.workQueue];
}
```

…sends our funky image to an image hosting service, for which we also have an agent. -[postImage…] returns an SPTask, which we will wait for. We can return this final task to the caller of ‘funkifyImage…’, and it will represent the entire chain of actions. In addition, the task will yield the URL of the image at that hosting service.

Finally, we don’t have to handle any errors in this chain. The caller can add an errback to the last task in the chain, and thereby handle an error from any part of it.

Fin. I’ve packaged the two classes in a GitHub project called SPAsync. Feel free to fork, comment, or contact me personally!

Thanks to Dan and Mattias for the feedback while I was writing this article!

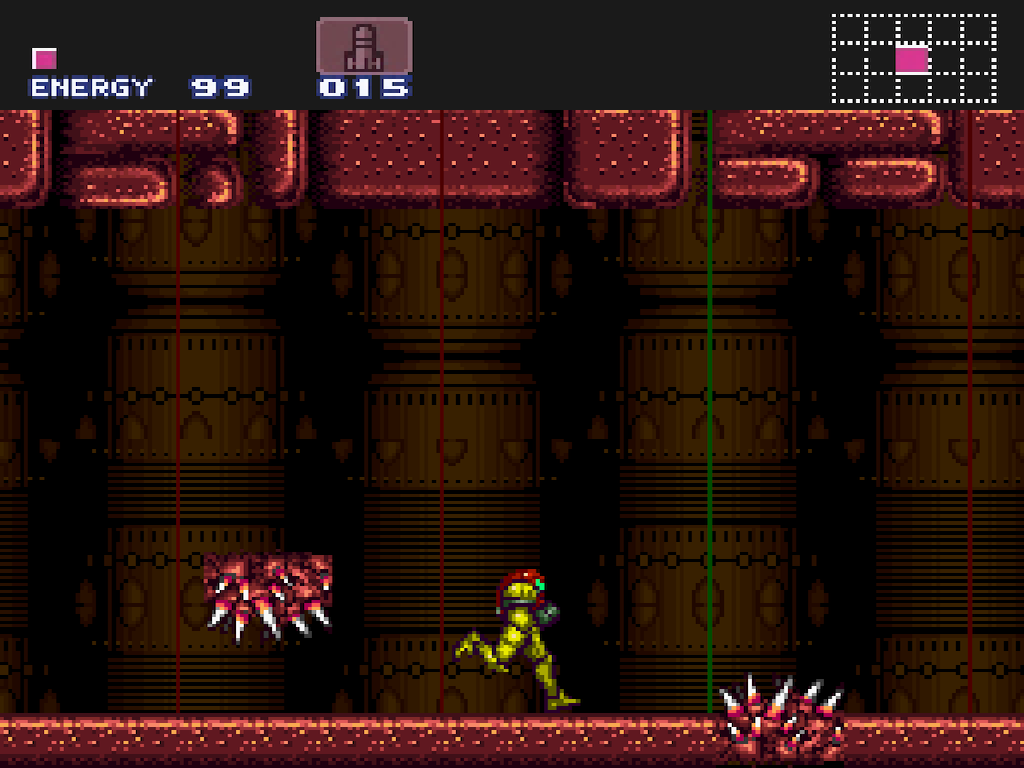

During the Music Hack Day Stockholm 2013 hackathon, mandy and I forked Deathtroid into Super Mutroid (full source code), added EchoNest and Spotify support, and made a Metroid music game.

You can download it here (requires Mac OS 10.8): Download from cl.ly

From the Hack project page:

Music/rhythm platform game with a Super Metroid theme

Description

Plays a song through Libspotify and downloads song data from EchoNest. Generates obstacles based on the beats in the song.

How to play

- W - Jump

- S - Duck

When you start the game, enter a player name on the left and your Spotify login details on the right. You can also enter a Spotify track URL in the text field under the Login button.

Then just press “Create Game” and wait for the song to load! (The character will automatically start running when the song has loaded. If nothing happens after several seconds, the song you entered probably isn’t available on EchoNest.)

You die if the character runs into the spikes. If that happens, press any key to restart. If you want to enter another track URL, you have to quit the game and restart it.

Known bugs

- Sometimes crashes when you press Create Game

- Flickering character

- You can jump through the spikes that you are supposed to duck under

The idea of writing iOS apps using HTML makes me mad. It infuriates me. This is a strange thing, and generally a sign that I should cool down, breathe deeply, and just ignore the subject until it’s been simmering in my brain long enough for me to think clearly about it. But it’s been years, now! How much breathing can a man take?

It’s so slow! And ugly! And inelegant! I either need a few absolute-positioned elements, or a layout that I’ll need to write code for anyway. Why would I involve a super-complex layout engine for that? Why do I want a document-based model conceived in the nineties for my playback UI? Why do I want an engine where a single missing “return NO” will make my button completely wipe out all existing state in my app and effectively restart it? One where loading is so slow that it’s designed to always be progressive, flickering like an Atari while slowly putting UI controls on my screen?

Argument: HTML will be fast enough soon.

Counter-argument: And by then native will be even faster.

Inner, loud voice: Oh yeah? I bet this will be the year of Linux on the desktop too.

Digression: Whatever computer speed we reach, we will find ways to use that power to make better UIs. The idea that an inefficient platform can “catch up” because there is some kind of level where you don’t need more processing power to make a great UI is a fallacy. Remember when floating-point animations with multiple transparent layers were expensive? If you are using the above argument, you’re the guy who argued that they’re a waste of cycles and don’t make a UI better.

Argument: HTML will have all the features native apps have soon.

Counter-argument: And by then native will have more features, some of which will be untranslatable or very hard to translate to a web environment.

Inner, loud voice: Draaag and droooppppp arrrrgggghhh

Argument: The reason every native-app-with-web-UI sucks is because everyone else sucks. We can do it correctly.

Counter-argument: Really? Facebook just doesn’t have good web developers? I don’t buy that.

Inner, loud voice: No, YOU suck! Nngggghhhh

Argument: A-B testing, dude! Fast deployments!

Counter-argument: You’ve made a product change, agreed on all the design, figured out what to test and how to gather good data, implemented it, and got it through QA in less than a week? Maybe you need a web view. Actual, existing people: you can A-B test in any environment, whether or not a web browser is involved, and you probably have time to wait a week.

Please flame me and prove me wrong. I’ve seen so many shit HTML apps, but maybe everybody really just is doing it wrong. And you’re right, I’m not a web developer, my javascript is shit, I haven’t even built a web page in a year, and I make a living writing ObjC. Of course I’m biased. Still, no ad-hominems please.

And you! You there staring at me angrily, just barely not shouting at me: yes, of course some sort of insta-deployment web-ish platform is going to be amazing and take over the world some time in the next decade. But it won’t be HTML5. Possibly it will be its great-grandchild platform, which will bear little resemblance to the original.

I had completely forgotten about nil targeted actions. BigZaphod reminds me of the canonical way of dismissing the keyboard:

[[UIApplication sharedApplication] sendAction:@selector(resignFirstResponder) to:nil from:nil forEvent:nil];

The responder chain is so under-used in iOS. I’ll definitely try to think of ways to solve problems using it in the future.

For those of you who haven’t coded for the Mac, nil-targeted actions are what make menus (among many other things) work. When you select “Copy” from the menu, the action is sent to the first responder. If that happens to be a text field, the text field gets the chance to put things on the clipboard. If it had been a check box, the check box would have no idea how to copy. However, that check box might be in a window that belongs to an NSDocument, and the document might have the concept of copying the document as a whole. The app would step through the responder chain for you, from the check box up through the window to the document, and perform the copy. Magic!
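The walk described above can be modelled in a few lines of plain C, which is also valid Objective-C. This is a toy sketch, not AppKit’s implementation, and `Responder`, `responds_to` and `send_action_to_nil` are hypothetical names.

The real chain has more stops, such as the window’s delegate and the application and its delegate. On the Mac, the real entry point is -[NSApplication sendAction:to:from:] with a nil target; the UIApplication call above is the iOS counterpart. Either way, sending to nil boils down to “start at the first responder and keep asking the next one until someone answers”:

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

/* Toy responder: a name, the actions it understands (NULL-terminated),
   and the next responder up the chain (control -> window -> document). */
typedef struct Responder {
    const char *name;
    const char *const *actions;
    const struct Responder *next;
} Responder;

static bool responds_to(const Responder *r, const char *action) {
    for (const char *const *a = r->actions; a != NULL && *a != NULL; a++) {
        if (strcmp(*a, action) == 0) {
            return true;
        }
    }
    return false;
}

/* A nil-targeted action: start at the first responder and walk `next`
   until somebody handles it. Returns the handler, or NULL if nobody does. */
static const Responder *send_action_to_nil(const Responder *first, const char *action) {
    assert(action != NULL);
    for (const Responder *r = first; r != NULL; r = r->next) {
        if (responds_to(r, action)) {
            return r;
        }
    }
    return NULL;
}
```

With the check box as first responder, “copy:” climbs past the window and lands on the document. A focused text field that implements copy: itself answers before anyone further up is asked.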

If you have ever touched C++, Java or C# and then moved to Objective-C, you have at some point written one of the following in a source file:

NSArray/*<MyThing>*/ *_queuedThings;

NSDictionary *_thingMap; // contains MyThing

-(MAFuture*)fetchThing; // wraps a MyThing

With TCTypeSafety, you can pretend that you’re writing in a language with generics and write:

@interface MyThing : NSObject

...

@end

TCMakeTypeSafe(MyThing)

(later...)

NSArray<MyThing> *_queuedThings;

Assume that we have some kind of Future class. Assume also that we have a factory that returns Futures that wrap the asynchronous creation of a MyThing. We might want to define the interface for such a factory like this:

@interface MyThingFactory : NSObject

- (TCFuture<MyThing> *)fetchLatestThing;

@end

Later, when we use the factory:

MyThingFactory *fac = [MyThingFactory new];

NSString *thing = [fac fetchLatestThing].typedObject;

… actually generates a compiler warning, since typedObject returns MyThing, not NSString. (Note that we did not have to give TCFuture knowledge of the MyThing type to get this benefit, or modify it in any way except make it TCTypeSafety compatible).

This way, we can ensure that even though we have wrapped our MyThings in a TCFuture, we haven’t thrown away type safety. If we want to change the return type of fetchLatestThing, we can just do so in the header and then fix all the compiler warnings, rather than going through every single usage of fetchLatestThing and fixing any now-invalid assumptions about the return type.

This is also useful for collections such as arrays and dictionaries:

NSMutableArray<MyThing> *typedArray = (id)[NSMutableArray new];

[typedArray insertTypedObject:@"Not a MyThing" atIndex:0]; // compiler warning! NSString ≠ MyThing

NSNumber *last = typedArray.lastTypedObject; // compiler warning! NSNumber ≠ MyThing

NSLog(@"Last typed thing: %@", last);

The syntax for indicating protocol conformance of a variable is the same as the one you would use for template/generics specialization in other languages. Also, the namespace for protocols is separate from the namespace for classes, so we can have a protocol with the same name as a class. So if we declare a protocol with getters and setters that take and return the type we are interested in, we get type safety. For example, we can create the protocol MyThing like this:

@protocol MyThing

- (MyThing*)typedObjectAtIndex:(NSUInteger)index;

- (void)addTypedObject:(MyThing*)thing;

@end

We then need to add support for these methods to NSArray and NSMutableArray. However, the type we are specializing on is only a compile-time hint and does not affect the type of the instance, so in the implementation we can just say that these take and return ‘id’.

@implementation NSArray (SPTypeSafety)

- (id)typedObjectAtIndex:(NSUInteger)index;

{

return [self objectAtIndex:index];

}

@end

@implementation NSMutableArray (SPTypeSafety)

- (void)addTypedObject:(id)thing;

{

[self addObject:thing];

}

@end

Tada! Write a macro that generates this protocol for you (like, say, TCMakeTypeSafe), and you have instant type safety.
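The protocol trick is specific to Objective-C. The underlying idea, typed wrappers generated by a macro over untyped storage, can be sketched in plain C, which an Objective-C compiler also accepts.

This is not how TCMakeTypeSafe is implemented. `UntypedArray`, `MAKE_TYPE_SAFE` and the generated `MyThing_add`/`MyThing_at` are hypothetical names chosen for illustration:

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Untyped storage, playing the role of an NSMutableArray full of `id`. */
typedef struct {
    void **items;
    size_t count;
    size_t capacity;
} UntypedArray;

static int untyped_add(UntypedArray *a, void *item) {
    if (a->count == a->capacity) {
        size_t capacity = a->capacity ? a->capacity * 2 : 4;
        void **grown = realloc(a->items, capacity * sizeof *grown);
        if (grown == NULL) {
            return -1;
        }
        a->items = grown;
        a->capacity = capacity;
    }
    a->items[a->count++] = item;
    return 0;
}

static void *untyped_at(const UntypedArray *a, size_t index) {
    assert(index < a->count);
    return a->items[index];
}

/* Hypothetical MAKE_TYPE_SAFE(T): generates typed wrappers around the
   untyped calls. As with TCMakeTypeSafe, T is only a compile-time hint;
   the storage itself never learns what it holds. */
#define MAKE_TYPE_SAFE(T)                                              \
    static inline int T##_add(UntypedArray *a, T *item) {              \
        return untyped_add(a, item);                                   \
    }                                                                  \
    static inline T *T##_at(const UntypedArray *a, size_t index) {     \
        return untyped_at(a, index);                                   \
    }

typedef struct {
    int value;
} MyThing;

MAKE_TYPE_SAFE(MyThing)
```

Passing a string to `MyThing_add` is diagnosed as an incompatible pointer type, much like the NSString warning in the array example. The catch is the same as with TCTypeSafety: the array does not remember its element type, so nothing stops some other generated `OtherThing_at` from reading the same storage.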

Are there downsides? Absolutely. You can only “specialize” on a single class: you can’t create some generic facility that would let you specialize both the key and the value of an NSDictionary. You have to use weird selectors such as -[NSArray typedObjectAtIndex:], since the protocol conformance sadly does not override the method signature for your array instance, and using -[NSArray objectAtIndex:] will still give you type-unsafe return values.

Worst of all, once you apply the TCMakeTypeSafe macro to your class, anything conforming to its protocol will suddenly look like it has the interfaces of a to-one accessor, NSArray, NSDictionary, and whatever else you add support for in TCMakeTypeSafe. Thus, you wouldn’t get the compile-time warnings you normally would if you changed your NSArray into an NSDictionary. I think this trade-off is worth it, but I’m not sure.

The code is at nevyn/TCTypeSafety on GitHub.

Update: turns out Jonathan Sterling had almost exactly the same idea only a few days ago.

We’re still writing code as if on a teletype, still feeding it to a black-box compiler, and getting magic out. There must be a more visual approach: one that’s faster to code in than ASCII, more succinct, gives you a better overview, and has an IDE that talks to you. I’ve been hunting for a visual programming language for a long time, as you probably know. Heck, I even wrote one (no, that’s not Quartz Composer).

So of course I had to back Light Table when I saw it. I can’t help but feel that Chris Granger’s project is overambitious and too broad to build something general and practically useful, but it’ll be a very interesting journey.

While researching Light Table, I ran into Jonathan Edwards’ thoughts on it, “An IDE is not enough”. He’s been working on a visual programming language of his own, called Subtext. He’s chosen a very specific problem—conditionals—and extrapolated a language from that. I’m really not sold on his visual representation, nor the incredibly mouse and context menu-heavy interface, but I really like the idea and the thoughts behind it. While waiting for the revolution, give your brain something to chew on with the embedded video below the quote.

Much of the design of our programming languages is an artifact of the linear nature of text.

The hackbook project is alive! Or at least limping around. I finally got myself a cheap pair of Vuzix Wrap 920 head-mounted displays. Wearable computer + monitor, check! The stereo vision in them is horrible, but I only want to use one of the screens anyway, so that I can see my surroundings, and on its own one of those screens is actually pretty decent. I haven’t dared to open them up yet to see if one of the monitors can be detached, though… I don’t have any input devices yet, either.

Fantastic and beautiful conclusion to Everything is a Remix, by Kirby Ferguson.

The above presentation by Bret Victor is amazing, and I couldn’t stop tweeting about it the other day. My mind was blown at:

4:20. Cool! I mean, I’ve seen live editors before, but I never thought of actually using sliders on numbers. Clever! I remember now, though, that this has been done by Codea (and probably others) before.

5:25. What. That changes the way I think about programming visual things. I keep thinking of all the times I’ve tweaked an animation or gradient, recompiled, redeployed to the device, and got to experience my change maybe a full minute later. How many solutions have I missed that I would have found, had I just been able to drag a slider to try variations?

I’ve tried to come up with solutions to this problem several times before. My first try was RemoteParameter. However, it is incredibly clumsy to integrate into the code being modified: the modified variable must be KVO-compliant, it only works for a single spot in the code (so it will fail if you’re e.g. modifying the background color for all cells in a list), and the editor UI is atrociously bad.

My second try was NuRemoter. I still actually use this tool, and it is somewhat useful for working with living code (sending calls in the middle of animations, debugging time-sensitive operations, etc). However, it is not at all visual, and any code I improvise in the NuRemoter UI will be in Nu, which I can’t use as-is in my project without rewriting it in ObjC. I have a few thoughts here, though. First off, I should make it easier to hook Nu code into our code base, so that things that are naturally expressed in a script-y language can be. Secondly, the NuRemote protocol can be used as a payload delivery system for other tools. For example, if I ever write an animation construction kit, I could use NuRemoter to upload and inject animation descriptions into the app, and immediately try them out. (I’ve thought of integrating it into my Localizer localization app, too.)

Wait, animation construction kit? Well, Core Animation is incredibly powerful and easy to use, but expressing animations in code is both unintuitive and slow (see: write/compile/deploy/test cycle), and maybe tobi is a better animator than me? I want something like the GLSL Shader editor: define input parameters (perhaps start and end locations, duration, intensity) and a sample image as a placeholder for the UIView being animated, and then define animations Keynote-style, possibly with values substituted for parameters. Output a json file, load it at runtime and use it instead of all the animation code. Shouldn’t be THAT hard to build?

6:10. My jaw drops just a tiny bit further, but enough to mark the time. Well, typing autocompletions into the autopreviewing document so that they run automatically is pretty obvious, but I love the idea of showing you what the thing does, rather than having you read documentation. Maybe the right sidebar in Xcode should have movies instead of help snippets?

7:43 and 8:12. How the hell did he do that‽

11:30. Again, how did he do that‽ He’s obviously not re-evaluating the whole file on each change. Maybe he’s been careful when writing the file so that it can be re-evaluated in the context of the currently “running” web page/js-vm? It looks like an incredibly nice way to code games, though. A little bit like Unity I guess; I remember having my mind blown the first time I saw that editor, too.

13:00 and 14:10. Now he’s just showing off. Surely this editor must be specifically written for this game engine he has constructed, and not something generic. Still want that code+editor, though!

Around 16:00 he re-iterates again how easy it is to be creative when you can visualize your results live, and how it doesn’t just apply to visuals or animations, but entire gameplay ideas. I’m so very sold on the idea.

Around 20:00, I really love the idea of supplying sample input data and see how that affects the code you just wrote.

Around 26:00, I should’ve realized that this is the same guy as the one who made That Math Video that blew my mind pretty thoroughly last year. I didn’t, until I checked his web site in preparation for this blog entry. He has plenty of new interesting entries there, if you haven’t checked them out lately.

However, out of all of these, the moment that perhaps isn’t the most interesting to me as a programmer, but nevertheless tipped me over from “yes, I am impressed” into a squealing, giggling pile of fanboyism, is 31:30. Probably the most amazing animation editor I’ve seen, and probably the most intuitive power-user UI I’ve seen, and it’s just an experiment he made? MIND BLOWN.

I love how, instead of trying to solve the problem of tiny hit targets with giant thumbs, he has the user select the layer with her left hand while manipulating it with her right. It’s a touch surface, and the user has two hands: why is nobody else using this fact‽ (My mind was similarly blown in ’05 by the same multi-hand usage in TactaPad, which sadly never became an actual product.)

Compositing all the different animations the user wants to get into the scene by just scrolling back time and immediately performing the next animation is equally genius (also seen, but not nearly as well implemented, in GarageBand). Just look at the amazing speed at which he composes that scene!

However, out of all the amazing things Bret is showing us, none are as inspiring as his outro. Ever since I started programming, I’ve felt an idea or principle just at the edge of my consciousness. I know it’s related to creating a user interaction paradigm that is more natural, but I can’t describe it more clearly than that. I just know, for example, that multitouch is a step in the right direction. I haven’t thought about it too much in the past two years as I’ve been busy at Spotify, and Bret’s note at the end that doing only one thing will not give you insights really hit home.

Alright, enough gushing. I hope that after all that, you did actually watch the video, and that you found as much inspiration as I did. Right now, I just want to stop everything I’m doing and take a few months off life and make tools. I should probably finish a few projects first though…